UX for Agentic AI: Transforming Users into Team Managers

- olivermorris83

- Nov 18, 2024


Updated: Nov 27, 2024

Agentic AI is transforming how we interact with technology, moving us from the role of "users" to "managers."

But the 'AI native' user interface (UI) is still an unwritten page in the UX handbook.

Unlike traditional SaaS, where users execute tasks directly, Agentic AI empowers us to delegate work to intelligent agents. This shift brings a new challenge: creating AI-native UIs that enable effective management.

That challenge is not always being met. Users are often disappointed by tools that tick nearly every box yet fail to equip them for this change of role.

So, what does it mean to manage a team of AI agents?

What is the UI to support that new role?

Let's explore the subject and see examples in today's products.

The New Role: From Employee to Manager

Traditional SaaS applications, like CRM software, are designed for users as employees. Users log in, access data, and perform tasks themselves. With Agentic AI, the paradigm flips: you’re not executing the task; you’re managing the team that executes it.

The user's primary tasks as a manager:

Give clear instructions:

Just like briefing a team, users must design prompts that guide agents effectively. Poor instructions are a frequent reason tools fail to satisfy.

Evaluate outputs:

As with any team, success depends on approving quality output, monitoring results, providing feedback, and iterating. No feedback = no improvement.

Improve workflows:

Managing agents is about process optimisation. Too many agents are expensive and confusing; too few may yield low-quality results.

Think "Digital Interns," Not "Robot Overlords"

Before we proceed, let's allay a common concern. AI agents are interns who assist in your work: eager new team members, full of potential yet prone to misunderstanding.

The key is understanding that they aren't replacing the user's expertise; they're amplifying it. When an intern drafts a report, the managing user reviews and refines it. When an AI agent processes data, the manager interprets and applies it.

There are two forces in our favour:

When a resource becomes cheaper and more available, we simply use more of it (Jevons Paradox)

Energy is the common example, but intelligence is the same

None of the AI tools I build would replace me. They do tasks which would not have been done otherwise

AI struggles with tasks humans find easy and excels at tasks humans find hard (Moravec's Paradox)

The classic example is that computers have dominated chess since 1997, yet still cannot manage people to a deadline.

This is good news: it suggests that partnership will be profitable.

Agentic AI Examples

There are many agentic AI platforms to get familiar with.

We'll look at UI examples from OpenAI, Cassidy, GodModeHQ and Zapier, because these are low-code tools aimed at users rather than developers. They are examples of good practice and are constantly improving. We can then discuss what they should be improving towards.

What Agentic AI Gets Right: Current UI Strengths

Agentic AI interfaces have already started embracing this managerial mindset. Here are some highlights:

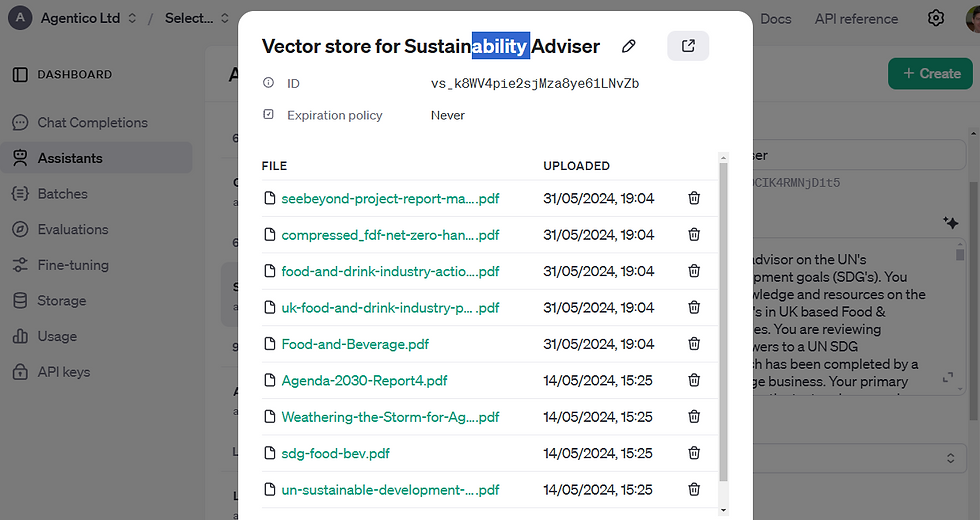

Chat Interfaces with Own Documents (RAG):

Users can easily build chatbots with their own materials.

Workflows for Agents:

Seamlessly design workflows for agents to follow, automating complex tasks.

Integrated Tools for Agents:

Agents can access external systems like CRMs or databases.

This is the focus of Zapier, Make.com and n8n; see the example of a chatbot with email below.

Table-Based Data Sources:

Agents iterate over records in a table, processing or enriching each one as they go: imagine running a leads funnel over thousands of contacts with a single setup.

Agents researching a list of records, one by one

Prompting with Variables:

Variables let users write a single prompt that refers to the fields of each record in a table of data.
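To make the table and variable features concrete, here is a minimal sketch in Python. It assumes the table is held as a list of dictionaries and uses `$column` placeholders from Python's `string.Template`; the placeholder syntax, the column names and the sample rows are all hypothetical, since each product uses its own conventions.

```python
from string import Template

# Hypothetical prompt template: $name, $company and $role stand for table columns.
# The $-placeholder syntax is an assumption; real tools use their own.
PROMPT = Template(
    "Research $company and write a two-line intro email to $name, "
    "who works there as $role."
)

# A tiny illustrative table: each dict is one record (row).
contacts = [
    {"name": "Ada", "company": "Acme Ltd", "role": "Head of Marketing"},
    {"name": "Ben", "company": "Globex", "role": "CTO"},
]

def build_prompts(template: Template, rows: list[dict]) -> list[str]:
    """Fill the template once per record, as an agent would before processing each row."""
    # substitute() raises KeyError if a row lacks a column the template uses,
    # so missing data is caught before any agent runs.
    return [template.substitute(row) for row in rows]

prompts = build_prompts(PROMPT, contacts)
for p in prompts:
    print(p)
```

One prompt, written once by the manager, fans out into a tailored instruction per record; each filled prompt would then be handed to an agent.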

These features represent significant progress in empowering users to manage agents effectively. But something is missing...

Mind the UX Gap

Despite these strengths, many Agentic AI interfaces fall short in three critical areas. These gaps reduce the quality of the agents' work, leaving users disappointed by performance.

Memory & Learning from Experience

Agents must improve over time, yet most systems lack tools for managing memories or for training agents in test environments before they go live.

Imagine onboarding a human employee without giving them past context or a chance to train; agents need both too.
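What "managing memories" could mean in practice is sketched below in Python. This is an illustrative assumption, not how any particular product works: the `MemoryStore` class is hypothetical, memories only reach the agent once a manager approves them, and relevance is judged by naive keyword overlap (production systems more often use embedding search).

```python
from dataclasses import dataclass, field

@dataclass
class Memory:
    text: str
    approved: bool = False  # only manager-approved memories reach the agent

@dataclass
class MemoryStore:
    """Hypothetical store of lessons a manager can add, approve and delete."""
    memories: list[Memory] = field(default_factory=list)

    def add(self, text: str) -> Memory:
        m = Memory(text)
        self.memories.append(m)
        return m

    def approve(self, index: int) -> None:
        self.memories[index].approved = True

    def forget(self, index: int) -> None:
        del self.memories[index]

    def relevant(self, task: str, limit: int = 3) -> list[str]:
        """Return approved memories sharing words with the task (naive keyword overlap)."""
        words = set(task.lower().split())
        scored = []
        for m in self.memories:
            if not m.approved:
                continue
            overlap = len(words & set(m.text.lower().split()))
            if overlap:
                scored.append((overlap, m.text))
        # Most overlapping memories first.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:limit]]

    def brief(self, task: str) -> str:
        """Prepend relevant past lessons to the task, like onboarding notes."""
        lessons = self.relevant(task)
        if not lessons:
            return task
        notes = "\n".join(f"- {t}" for t in lessons)
        return f"Lessons from past work:\n{notes}\n\nTask: {task}"

store = MemoryStore()
store.add("invoices from acme need a purchase order number")
store.add("always reply to complaints within one day")
store.add("acme prefers formal tone")
store.approve(0)
store.approve(2)  # memory 1 stays unapproved, so the agent never sees it
print(store.brief("draft reply to acme about invoices"))
```

The approval step is the managerial part: the agent learns from experience, but a human decides which lessons stick.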

Evaluation and Feedback

Agents cannot improve without feedback.

Systems need robust evaluation tools to score outcomes or batch-test workflows.

These tools are essential for iterating towards better performance.
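A batch test can be as simple as running a workflow over a labelled set of cases and scoring each output. The Python sketch below assumes a hypothetical `toy_agent` stub in place of a real agent call, an exact-match scorer and three made-up test cases; a real evaluation dashboard would swap in its own scoring rules.

```python
from typing import Callable

# Hypothetical stand-in for an agent workflow; in practice this would call an LLM.
def toy_agent(question: str) -> str:
    answers = {"capital of france": "Paris", "2 + 2": "4"}
    return answers.get(question.lower(), "I don't know")

# A small labelled test set (illustrative data, not real results).
test_cases = [
    ("Capital of France", "Paris"),
    ("2 + 2", "4"),
    ("Capital of Peru", "Lima"),
]

def exact_match(output: str, expected: str) -> float:
    """Score 1.0 if the output matches the expected answer (case-insensitive), else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0

def batch_eval(agent: Callable[[str], str], cases, scorer=exact_match):
    """Run every case through the agent, score it, and collect failures for review."""
    scores, failures = [], []
    for question, expected in cases:
        output = agent(question)
        score = scorer(output, expected)
        scores.append(score)
        if score < 1.0:
            failures.append((question, expected, output))
    return sum(scores) / len(scores), failures

accuracy, failures = batch_eval(toy_agent, test_cases)
print(f"accuracy: {accuracy:.2f}")
for q, exp, got in failures:
    print(f"FAIL {q!r}: expected {exp!r}, got {got!r}")
```

Re-running the same cases after each prompt or workflow change gives the manager a single number to track, plus a list of specific failures to give feedback on.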

Teamwork

Managers of agent teams still need to work with their own managers and with human colleagues.

That calls for versioning, so prompts and workflows can be edited as a team (as in Miro, Lucidchart or Lex).
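Here is a minimal Python sketch of what prompt versioning might involve, assuming a hypothetical append-only `PromptHistory` class: every edit is a new version tagged with its author and a note, any two versions can be diffed with the standard library's `difflib.unified_diff`, and a revert is recorded as a new version rather than erasing history.

```python
import difflib
from dataclasses import dataclass

@dataclass(frozen=True)
class PromptVersion:
    number: int
    author: str
    text: str
    note: str

class PromptHistory:
    """Hypothetical append-only history of one prompt, so a team can see who changed what."""

    def __init__(self) -> None:
        self.versions: list[PromptVersion] = []

    def commit(self, author: str, text: str, note: str) -> PromptVersion:
        v = PromptVersion(len(self.versions) + 1, author, text, note)
        self.versions.append(v)
        return v

    def current(self) -> PromptVersion:
        return self.versions[-1]

    def diff(self, a: int, b: int) -> str:
        """Line-by-line diff between two version numbers (1-based)."""
        old, new = self.versions[a - 1], self.versions[b - 1]
        return "\n".join(difflib.unified_diff(
            old.text.splitlines(), new.text.splitlines(),
            fromfile=f"v{a} ({old.author})", tofile=f"v{b} ({new.author})",
            lineterm="",
        ))

    def revert(self, author: str, to: int) -> PromptVersion:
        """Restore an old version as a new commit, keeping the history intact."""
        return self.commit(author, self.versions[to - 1].text, f"revert to v{to}")

history = PromptHistory()
history.commit("alice", "Summarise the lead.\nUse a friendly tone.", "first draft")
history.commit("bob", "Summarise the lead.\nUse a formal tone.", "client prefers formal")
print(history.diff(1, 2))
history.revert("alice", to=1)
print(history.current().number, history.current().note)
```

Keeping reverts as new commits means the team never loses the record of an experiment, which matters when several people are tuning the same agent.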

Seen It All Before?

We already have team management software: brainstorming, kanban task management, flowcharted workflows and calendar scheduling.

It just needs a few tweaks for working with agents and we're there...

Designing for the Managerial Era: A Call to Action

For designers, the challenge is clear: build UIs that support effective management. Here’s what that looks like:

Simplified prompt design

Intuitive interfaces for crafting, enhancing and testing performant prompts

Evaluation dashboards

Clear metrics for monitoring agent performance and providing feedback

Learning tools

Enable agents to adapt and improve through managed memories

The businesses that embrace this shift will lead the way in the Agentic era. By rethinking UX for a world where we all manage teams of AI, they’ll unlock new levels of adoption.

Agentico is among the first Agentic AI advisors in the UK, backed by 10 years of experience in machine learning and 20 years in analytics. Make sense of, and leverage, the seismic changes that AI agents are bringing to marketing or your industry. We're happy to talk more about the opportunities of Agentic AI and ML at your organisation or event. Get in touch: Agentico.ai, AI with humans in mind.
